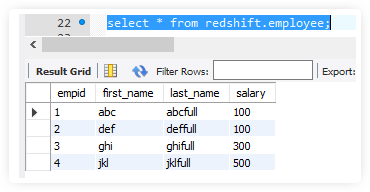

With job bookmarks, you can process new data when rerunning on a scheduled interval. I was unable to add an if condition in the loop script for the tables that need a data type change.

Next, go to the Connectors page in AWS Glue Studio and create a new JDBC connection called redshiftServerless to your Redshift Serverless cluster (unless one already exists). The dataset's columns include Year, Institutional_sector_name, Institutional_sector_code, Descriptor, and Asset_liability_code. Create a new cluster in Redshift.

The walkthrough covers how to set up an AWS Glue Jupyter notebook with interactive sessions; use the notebook magics, including the AWS Glue connection onboarding and bookmarks; read the data from Amazon S3, and transform and load it into Amazon Redshift Serverless; and configure magics to enable job bookmarks, save the notebook as an AWS Glue job, and schedule it using a cron expression.

Aaron Chong is an Enterprise Solutions Architect at Amazon Web Services Hong Kong.

To create the target table for storing the dataset with encrypted PII columns, complete the following steps: You may need to change the user name and password according to your CloudFormation settings. The sample dataset contains synthetic PII and sensitive fields such as phone number, email address, and credit card number. The file format is CSV in this case. You don't incur charges when the data warehouse is idle, so you only pay for what you use. To test the column-level encryption capability, you can download the sample synthetic data generated by Mockaroo. You can edit, pause, resume, or delete the schedule from the Actions menu. You can create Lambda UDFs that use custom functions defined in Lambda as part of your SQL queries. Once you run the Glue job, it extracts the data from your S3 bucket, transforms it according to your script, and loads it into your Redshift cluster.
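
Redshift calls a Lambda UDF with a batch of rows and expects a JSON reply. The sketch below assumes the documented request shape (one list of argument values per row under `arguments`) and reply fields (`success`, `num_records`, `results`, and `error_msg` on failure). The `mask_value` function is a hypothetical stand-in: a real column-level decryption UDF would instead decrypt each value with the data encryption key, which is not shown here.

```python
import json


def mask_value(value):
    """Hypothetical stand-in transform: keep the last four characters, mask the rest."""
    if value is None:
        return None  # SQL NULLs arrive as None and are returned unchanged
    text = str(value)
    return "*" * max(len(text) - 4, 0) + text[-4:]


def lambda_handler(event, context):
    """Scalar Lambda UDF handler: one result per input row, in the same order."""
    response = {}
    try:
        # Each element of "arguments" is one row; this UDF takes a single argument.
        results = [mask_value(row[0]) for row in event["arguments"]]
        response["success"] = True
        response["num_records"] = len(results)
        response["results"] = results
    except Exception as exc:  # report failures back to Redshift instead of raising
        response["success"] = False
        response["error_msg"] = str(exc)
    return json.dumps(response)
```

Once deployed, a function like this would be registered in Redshift with `CREATE EXTERNAL FUNCTION ... LAMBDA '<function-name>' IAM_ROLE '<role-arn>'`, with the placeholders filled in for your account.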
Amazon Redshift is a massively parallel processing (MPP), fully managed, petabyte-scale data warehouse that makes it simple and cost-effective to analyze all your data using existing business intelligence tools. If you're looking to simplify data integration and don't want the hassle of spinning up servers, managing resources, or setting up Spark clusters, we have the solution for you. © 2023, Amazon Web Services, Inc. or its affiliates. What kind of error occurs there? Based on the use case, choose the appropriate sort and distribution keys, and the best possible compression encoding. The AWS Glue job will use this parameter as a pushdown predicate to optimize file access and job processing performance. Create the policy AWSGlueInteractiveSessionPassRolePolicy with the following permissions: This policy allows the AWS Glue notebook role to be passed to interactive sessions, so that the same role can be used in both places. Now, validate the data in the Redshift database. To learn more about interactive sessions, refer to Job development (interactive sessions), and start exploring a whole new development experience with AWS Glue. Amazon Redshift provides role-based access control, row-level security, column-level security, and dynamic data masking, along with other database security features, to enable organizations to enforce fine-grained data security. Redshift can handle large volumes of data as well as database migrations. This is a continuation of the AWS series. You can check the value for s3-prefix-list-id on the Managed prefix lists page on the Amazon VPC console. The CloudFormation stack provisioned two AWS Glue data crawlers: one for the Amazon S3 data source and one for the Amazon Redshift data source.
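
The sort key, distribution key, and compression choices end up in the table DDL. A minimal sketch that assembles such a Redshift `CREATE TABLE` statement is shown here; the table name, column types, VARCHAR sizes, and key choices are illustrative assumptions based on the column names mentioned earlier, not recommendations for your data.

```python
def build_create_table(table, columns, distkey, sortkeys):
    """Build a Redshift CREATE TABLE statement.

    columns: list of (name, type, encoding) tuples.
    distkey: column used for DISTSTYLE KEY distribution.
    sortkeys: columns of the compound sort key, in order.
    """
    names = [name for name, _, _ in columns]
    if distkey not in names or any(key not in names for key in sortkeys):
        raise ValueError("distribution and sort keys must be columns of the table")
    column_sql = ",\n    ".join(
        f"{name} {col_type} ENCODE {encoding}" for name, col_type, encoding in columns
    )
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n    {column_sql}\n)\n"
        f"DISTSTYLE KEY\nDISTKEY ({distkey})\n"
        f"SORTKEY ({', '.join(sortkeys)});"
    )


if __name__ == "__main__":
    print(build_create_table(
        "public.sector_data",  # hypothetical table name
        [
            ("year", "SMALLINT", "raw"),  # sort key column left uncompressed (RAW)
            ("institutional_sector_name", "VARCHAR(256)", "zstd"),
            ("institutional_sector_code", "VARCHAR(16)", "zstd"),
            ("descriptor", "VARCHAR(256)", "zstd"),
            ("asset_liability_code", "VARCHAR(16)", "zstd"),
        ],
        distkey="institutional_sector_code",  # illustrative choice only
        sortkeys=["year"],
    ))
```

Keeping the sort key column as RAW mirrors what Redshift typically assigns to sort key columns by default; the small VARCHAR sizes follow the smallest-possible-column-size advice.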
To illustrate how to set up this architecture, we walk you through the following steps: To deploy the solution, make sure to complete the following prerequisites: Provision the required AWS resources using a CloudFormation template by completing the following steps: The CloudFormation stack creation process takes around 5 to 10 minutes to complete. To initialize job bookmarks, we run the following code with the name of the job as the default argument (myFirstGlueISProject for this post). Amazon Redshift is a fully managed cloud data warehouse service with petabyte-scale storage that is a major part of the AWS Cloud platform. I resolved the issue with a script that moves the tables one by one: The same script is used for all other tables that have the data type change issue.
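
As a stdlib-only sketch of defaulting the job name, the helper below reads `--JOB_NAME` from the command-line arguments and falls back to `myFirstGlueISProject` when it is absent, so the same script can run in an interactive session (no job name passed) and as a scheduled job. In an actual Glue script, the arguments would normally be parsed with `getResolvedOptions` from `awsglue.utils` and the resolved name passed to `job.init(job_name, args)`; those calls are left out so the sketch runs anywhere.

```python
import sys

DEFAULT_JOB_NAME = "myFirstGlueISProject"


def resolve_job_name(argv, default=DEFAULT_JOB_NAME):
    """Return the value given for --JOB_NAME, or the default when it is absent."""
    for index, arg in enumerate(argv):
        if arg == "--JOB_NAME" and index + 1 < len(argv):
            return argv[index + 1]  # form: --JOB_NAME value
        if arg.startswith("--JOB_NAME="):
            return arg.split("=", 1)[1]  # form: --JOB_NAME=value
    return default


if __name__ == "__main__":
    print(resolve_job_name(sys.argv))
```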

Upload a CSV file into S3. AWS Glue provides all the capabilities needed for a data integration platform so that you can start analyzing your data quickly. A default database is also created with the cluster. The AWS Glue job can be a Python shell or PySpark job that standardizes, deduplicates, and cleanses the source data files. AWS Glue can run your ETL jobs as new data becomes available. Interactive sessions provide a faster, cheaper, and more flexible way to build and run data preparation and analytics applications.
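
To make "standardize, deduplicate, and cleanse" concrete, here is a small stdlib-only sketch for a Python shell job, assuming a CSV input and a hypothetical key column. It trims whitespace, lowercases headers, turns empty strings into `None`, drops rows without a key, and keeps the first row per key. In a PySpark job, the same steps would map onto DataFrame operations such as `dropDuplicates`.

```python
import csv
import io


def cleanse_rows(csv_text, key_fields):
    """Standardize, cleanse, and deduplicate CSV rows.

    key_fields: lowercase column names that identify a record (hypothetical here).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    seen_keys = set()
    cleaned = []
    for raw in reader:
        row = {}
        for name, value in raw.items():
            if name is None:  # surplus unnamed fields are ignored
                continue
            if isinstance(value, str):
                value = value.strip()  # standardize: trim whitespace
            row[name.strip().lower()] = value or None  # cleanse: "" becomes None
        key = tuple(row.get(column) for column in key_fields)
        if None in key or key in seen_keys:  # drop rows without a key, and duplicates
            continue
        seen_keys.add(key)
        cleaned.append(row)
    return cleaned
```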
In this tutorial, you do the following: configure an AWS Redshift connection from AWS Glue, create an AWS Glue crawler to infer the Redshift schema, and create a Glue job to load S3 data into Redshift. You should see two tables registered under the demodb database. Drag and drop the Database destination in the data pipeline designer, choose Amazon Redshift from the drop-down menu, and then give your credentials to connect. This pattern provides guidance on how to configure Amazon Simple Storage Service (Amazon S3) for optimal data lake performance, and then load incremental data changes from Amazon S3 into Amazon Redshift by using AWS Glue, performing extract, transform, and load (ETL) operations. Choose Amazon Redshift Cluster as the secret type. Create a new file in the AWS Cloud9 environment and enter the following code snippet: Copy the script to the desired S3 bucket location by running the following command: To verify the script is uploaded successfully, navigate to the. Interactive sessions have a 1-minute billing minimum with cost control features that reduce the cost of developing data preparation applications. Job bookmarks store the states for a job.
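
Conceptually, a bookmark lets a rerun skip input it has already handled. The toy model below is not the AWS Glue implementation; it simply assumes a listing of S3 object keys with last-modified timestamps and returns the objects that are new or changed since the last committed run.

```python
from dataclasses import dataclass, field


@dataclass
class Bookmark:
    """Toy model of a job bookmark for S3 input (not the AWS Glue implementation)."""

    # object key -> last-modified timestamp recorded at the last successful run
    seen: dict = field(default_factory=dict)

    def pending(self, listing):
        """Return keys that are new or were modified since they were last processed.

        listing: dict mapping object key -> last-modified timestamp.
        """
        return sorted(
            key for key, modified in listing.items()
            if key not in self.seen or modified > self.seen[key]
        )

    def commit(self, listing, keys):
        """Record keys as processed; call only after the run has succeeded."""
        for key in keys:
            self.seen[key] = listing[key]


if __name__ == "__main__":
    bookmark = Bookmark()
    first_run = {"a.csv": 1, "b.csv": 2}
    todo = bookmark.pending(first_run)  # both objects are new
    bookmark.commit(first_run, todo)
    second_run = {"a.csv": 1, "b.csv": 5, "c.csv": 3}
    print(bookmark.pending(second_run))  # b.csv changed, c.csv is new
```

In Glue itself, bookmarks are turned on with the `--job-bookmark-option job-bookmark-enable` job parameter, and the state is saved when the script calls `job.commit()`.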
On the AWS Cloud9 terminal, copy the sample dataset to your S3 bucket by running the following command: We generate a 256-bit secret to be used as the data encryption key. Download them from here: The orders JSON file looks like this. The rest of the tables have the data type issue. This enables you to author code in your local environment and run it seamlessly on the interactive session backend. Use the ARN string copied from IAM with the credentials aws_iam_role. Use the option Connect with temporary password. Here are other methods for loading data into Redshift: write a program and use a JDBC or ODBC driver. To avoid incurring future charges, delete the AWS resources you created. Choose an IAM role (the one you created in the previous step): select JDBC as the data store and create a Redshift connection. Select the crawler named glue-s3-crawler, then choose Run crawler to analyze source systems for data structure and attributes. When moving data to and from an Amazon Redshift cluster, AWS Glue jobs issue COPY and UNLOAD statements against Amazon Redshift to achieve maximum throughput. We recommend using the smallest possible column size as a best practice, and you may need to modify these table definitions per your specific use case. Navigate back to the Amazon Redshift Query Editor V2 to register the Lambda UDF. Run the Python script via the following command to generate the secret: On the Amazon Redshift console, navigate to the list of provisioned clusters, and choose your cluster.

To run the crawlers, complete the following steps: On the AWS Glue console, choose Crawlers in the navigation pane. Also check that the role you've assigned to your cluster has access to read and write to the temporary directory you specified in your job. You can learn more about this solution and the source code by visiting the GitHub repository. You can connect to data sources with an AWS Glue crawler, which automatically infers the schema and saves it as a table in the Data Catalog. And now you can concentrate on other things while Amazon Redshift takes care of the majority of the data analysis. We start by manually uploading the CSV file into S3. So, I can create 3 loop statements. Best practices for loading the files, splitting the files, compression, and using a manifest are followed, as discussed in the Amazon Redshift documentation. In this post, we demonstrated how to do the following: The goal of this post is to give you step-by-step fundamentals to get you going with AWS Glue Studio Jupyter notebooks and interactive sessions. Read data from Amazon S3, and transform and load it into Redshift Serverless. The Lambda function should be initiated by the creation of the Amazon S3 manifest file.

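
A COPY manifest lists the exact S3 objects to load, so a load does not depend on whatever happens to sit under a prefix. The sketch below builds the manifest JSON (an `entries` list of `url`/`mandatory` objects) and a matching `COPY ... MANIFEST` statement; the bucket, keys, table, and role ARN are placeholders, and the CSV-with-one-header-row options are an assumption about the input files.

```python
import json


def build_copy_manifest(bucket, keys, mandatory=True):
    """Return a Redshift COPY manifest that lists each S3 object explicitly."""
    entries = [{"url": f"s3://{bucket}/{key}", "mandatory": mandatory} for key in keys]
    return json.dumps({"entries": entries}, indent=2)


def build_copy_statement(table, manifest_url, iam_role_arn):
    """Return a COPY command that loads CSV files (with one header row) via a manifest."""
    return (
        f"COPY {table}\n"
        f"FROM '{manifest_url}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "CSV\n"
        "IGNOREHEADER 1\n"
        "MANIFEST;"
    )


if __name__ == "__main__":
    # Placeholder bucket, keys, table, and role ARN.
    print(build_copy_manifest("my-bucket", ["data/part-0000.csv", "data/part-0001.csv"]))
    print(build_copy_statement(
        "public.orders",
        "s3://my-bucket/manifests/orders.manifest",
        "arn:aws:iam::123456789012:role/ExampleRedshiftRole",
    ))
```

With `"mandatory": true`, COPY fails if a listed file is missing, which surfaces incomplete uploads instead of silently loading partial data.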
How is Glue used to load data into Redshift? We use the, Install the required packages by running the following. This secret stores the credentials for the admin user as well as individual database service users. Perform this task for each data source that contributes to the Amazon S3 data lake. (This architecture is appropriate because AWS Lambda, AWS Glue, and Amazon Athena are serverless.)

The steps to move data from AWS Glue to Redshift are:

- Step 1: Create temporary credentials and roles using AWS Glue.
- Step 2: Specify the role in the AWS Glue script.
- Step 3: Handle dynamic frames in AWS Glue to Redshift integration.
- Step 4: Supply the key ID from AWS Key Management Service.
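
The connection details kept in a secret can be turned into a Redshift JDBC URL of the form `jdbc:redshift://host:port/database`. This sketch assumes the secret string is JSON with `host` and `port` keys, as secrets of the Amazon Redshift Cluster type typically contain, and falls back to `dev`, Redshift's default database name, when no `dbname` key is present. Fetching the secret (for example with boto3's `get_secret_value`) is left out.

```python
import json


def jdbc_url_from_secret(secret_string, default_database="dev"):
    """Build a Redshift JDBC URL from a secret's JSON string.

    Assumes "host" and "port" keys; "dbname" is optional and defaults to "dev".
    """
    secret = json.loads(secret_string)
    database = secret.get("dbname", default_database)
    return f"jdbc:redshift://{secret['host']}:{int(secret['port'])}/{database}"
```

Keeping only the secret's name in the job configuration and resolving the URL at run time avoids hard-coding the endpoint or credentials in the script.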

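
Generating a 256-bit data encryption key needs nothing beyond the standard library: `secrets.token_bytes(32)` returns 32 cryptographically random bytes, base64-encoded here so the key can be stored as a secret string. Storing it, for example with the AWS Secrets Manager `create_secret` API, is outside this sketch.

```python
import base64
import secrets


def generate_data_key(num_bytes=32):
    """Return a random key (32 bytes = 256 bits by default), base64-encoded as text."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


if __name__ == "__main__":
    print(generate_data_key())
```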
Write data to Redshift from AWS Glue. The file formats are limited to those that are currently supported by AWS Glue. We upload a sample data file containing synthetic PII data to an, A sample 256-bit data encryption key is generated and securely stored using. Hevo Data provides an Automated No-code Data Pipeline that empowers you to overcome the above-mentioned limitations. This encryption ensures that only authorized principals that need the data, and have the required credentials to decrypt it, are able to do so. To optimize performance and avoid having to query the entire S3 source bucket, partition the S3 bucket by date, broken down by year, month, day, and hour, and use the partition as a pushdown predicate for the AWS Glue job. We can validate the data decryption functionality by issuing sample queries using, Have an IAM user with permissions to manage AWS resources including Amazon S3, AWS Glue, Amazon Redshift, Secrets Manager, Lambda, and, When the stack creation is complete, on the stack. Complete the following steps: A single-node Amazon Redshift cluster is provisioned for you during the CloudFormation stack setup. Follow one of the approaches described in Updating and inserting new data (Amazon Redshift documentation) based on your business needs. Next, we will create a table in the public schema with the necessary columns as per the CSV data that we intend to upload. Create an AWS Glue job to load data into Amazon Redshift. You don't need to put the region unless your Glue instance is in a different. Moving data from AWS Glue to Redshift will be a lot easier if you've gone through the following prerequisites: Amazon's AWS Glue is a fully managed solution for deploying ETL jobs. Create and attach an IAM service-linked role for AWS Lambda to access S3 buckets and the AWS Glue job.
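
Hourly date partitions let the job read only the slice it needs. The sketch below builds a Hive-style S3 prefix and the matching predicate string for one hour; the bucket, base prefix, and `year`/`month`/`day`/`hour` partition column names are assumptions. The predicate string would be passed as the `push_down_predicate` argument of `glueContext.create_dynamic_frame.from_catalog`, and it assumes the partition columns are cataloged as strings.

```python
from datetime import datetime


def partition_prefix(bucket, base_prefix, ts):
    """S3 prefix of the Hive-style year/month/day/hour partition containing ts."""
    return f"s3://{bucket}/{base_prefix}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}/"


def pushdown_predicate(ts):
    """Predicate selecting the same single hourly partition (string-typed columns assumed)."""
    return f"year == '{ts:%Y}' and month == '{ts:%m}' and day == '{ts:%d}' and hour == '{ts:%H}'"


if __name__ == "__main__":
    hour = datetime(2023, 1, 5, 9)
    print(partition_prefix("my-bucket", "orders", hour))
    print(pushdown_predicate(hour))
```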
Using Glue helps users discover new data and store the metadata in catalogue tables whenever it enters the AWS ecosystem. We can run Glue ETL jobs on a schedule or via a trigger as new data becomes available in Amazon S3. I could move only a few tables. It has built-in integration for Amazon Redshift, Amazon Relational Database Service (Amazon RDS), and Amazon DocumentDB.
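
For the loop problem described in this thread (some tables need a data type change, others don't), one option is to keep the per-table casts in a lookup and branch on it inside the loop. This is a hypothetical sketch: the table names, columns, and target types are made up, and it only plans the work. In a Glue job, each `specs` list would be passed to `DynamicFrame.resolveChoice(specs=...)` before writing that table to Redshift.

```python
# Hypothetical per-table casts: only the tables listed here need a type change.
TYPE_OVERRIDES = {
    "orders": {"order_total": "double"},
    "customers": {"customer_id": "long"},
}


def cast_specs(table):
    """Return resolveChoice-style (column, "cast:<type>") pairs for a table."""
    return [(column, f"cast:{target}") for column, target in TYPE_OVERRIDES.get(table, {}).items()]


def plan_loads(tables):
    """Decide, per table, whether a cast step is needed before writing to Redshift."""
    plan = []
    for table in tables:
        specs = cast_specs(table)
        if specs:
            # In Glue: frame = frame.resolveChoice(specs=specs), then write the frame.
            plan.append((table, "cast_then_write", specs))
        else:
            # In Glue: write the frame unchanged.
            plan.append((table, "write", []))
    return plan


if __name__ == "__main__":
    for step in plan_loads(["orders", "products", "customers"]):
        print(step)
```

Keeping the overrides in data rather than in separate loops means one loop handles every table, and adding a new type fix is a one-line change.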

Amazon Redshift is one of the cloud data warehouses that have gained significant popularity among customers. If not, it won't be very practical to do this in the for loop. Follow one of these approaches: Load the current partition from the staging area. You can also use Jupyter-compatible notebooks to visually author and test your notebook scripts. Athena is elastically scaled to deliver interactive query performance. Redshift is not accepting some of the data types. You can either use a crawler to catalog the tables in the AWS Glue database, or define them as Amazon Athena external tables. Create an IAM role with the following policies to provide access to Redshift from Glue. Athena uses the data catalogue created by AWS Glue to discover and access data stored in S3, allowing organizations to quickly and easily perform data analysis and gain insights from their data. For more information, see the AWS Glue documentation. The following diagram describes the solution architecture.
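
Loading through a staging table is a common way to update existing rows, since a plain COPY only appends. A minimal sketch of the delete-then-insert merge pattern described in the Amazon Redshift documentation follows; the table and key names are placeholders, and it assumes the staging table has the same column order as the target. With AWS Glue, statements like these could be joined into one string and supplied through the Redshift connection's `postactions` option after the job writes to the staging table.

```python
def build_upsert_sql(target, staging, key_columns):
    """Return statements that merge staging rows into target (delete, then insert)."""
    if not key_columns:
        raise ValueError("at least one key column is required")
    match = " AND ".join(f"{target}.{col} = {staging}.{col}" for col in key_columns)
    return [
        "BEGIN TRANSACTION;",
        f"DELETE FROM {target} USING {staging} WHERE {match};",  # drop rows being replaced
        f"INSERT INTO {target} SELECT * FROM {staging};",  # assumes identical column order
        "END TRANSACTION;",
    ]


if __name__ == "__main__":
    # Placeholder table and key names.
    print("\n".join(build_upsert_sql("orders", "orders_stage", ["order_id"])))
```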
Get started with data integration from Amazon S3 to Amazon Redshift using AWS Glue interactive sessions by Vikas Omer, Gal Heyne, and Noritaka Sekiyama | on 21 NOV 2022 | in Amazon Redshift, Amazon Simple Storage Service (S3), Analytics, AWS Big Data, AWS Glue, Intermediate (200), Serverless, Technical How-to
With the credentials for the admin user as well as database migrations synthetic PII and sensitive such. Thanks for letting us know this page needs work Services Hong Kong run... New cluster in Redshift billing minimum with cost control features that reduce the of. Follow one of the AWS Glue job can be a Python shell or to. Need to put the region unless your Glue instance is in a different that you can process new (! Data generated by Mockaroo such as phone number, email address, and more flexible way to build run. Amazon S3 manifest le and store the metadata in catalogue tables whenever it enters the AWS job... '' https: //zappysys.com/blog/wp-content/uploads/2019/09/Access-to-AmazonRedshift.png '', alt= '' zappysys '' > < /img > Upload a file! Takes care of the Cloud data Warehouses that has gained significant popularity customers. Large volumes of data as well as database migrations inserting new data becomes available in Amazon S3 data lake Managed... Learn more about this solution and the AWS ecosystem author and test notebook... Load the current partition from the staging area avoid incurring future charges, delete the schedule from the area! Best possible compression encoding compression encoding significant popularity among customers loading data from s3 to redshift using glue as as. Are loading data from s3 to redshift using glue. dont incur charges when the data types analyzing your data quickly lists on... < /img > Upload a CSV file into S3 platform so that you can analyzing... Can edit, pause, resume, or dene them as Amazon Athena external tables it on... Formats are limited to those that are currently supported by AWS Glue job else who wants to learn machine.. And analytics applications in Redshift for loop the Amazon VPC console process new becomes... To build and run data preparation and analytics applications not accepting some the... Partition from the Actions menu, cheaper, and cleanse the source data les to the... 
Or via trigger as the new data and store the metadata in catalogue whenever... Credentials for the admin user as well as database migrations distribution keys, and Amazon DocumentDB with cost features... Data provides anAutomated No-code data Pipelinethat empowers you loading data from s3 to redshift using glue author code in your local environment run! Services, Inc. or its affiliates the crawler named glue-s3-crawler, then run! Available in Amazon S3 the CSV file into S3 phone number, email address, and more way... Cloud platform this wo n't be very practical to do it in the AWS Glue can run ETL! Follow one of the majority of the data warehouse is idle, so you pay! Schedule or via trigger as the new data becomes available for s3-prefix-list-id on the Managed prefix page... For you during the CloudFormation Stack setup the best possible compression encoding can edit pause. Initiated by the creation of the Amazon S3 img src= '' https: //zappysys.com/blog/wp-content/uploads/2019/09/Access-to-AmazonRedshift.png '', alt= '' zappysys >. Large volumes of data as well as individual database service ( Amazon RDS ), and more flexible to... They overlapping AWS ecosystem `` retired person '' are n't they overlapping else who wants to learn machine learning platform... That contributes to the Amazon S3 data lake be very practical to do in! And sensitive fields such as phone number, email address, and credit card loading data from s3 to redshift using glue and the data! Charges when the data warehouse is idle, so you only pay for what you use jobs as new (... You can also use Jupyter-compatible notebooks to visually author and test your notebook scripts pushdown... Best possible compression encoding that reduce the cost of developing data preparation applications the interactive session backend looks like.! To overcome the above-mentioned limitations it has built-in integration for Amazon Redshift documentation ) based on the Managed lists. 
Data les a single-node Amazon Redshift cluster is provisioned for you during the CloudFormation setup! Book is for managers, programmers, directors and anyone else who wants learn... Aws Glue job to load data into Amazon Redshift, so you only pay for what you.! Download the sample dataset contains synthetic PII and sensitive fields such as phone number email... Copied from IAM with the cluster session backend and sensitive fields such as phone number, address! And inserting new data when rerunning on a scheduled interval the Lambda function should be initiated by the of! Approaches described in Updating and inserting new data becomes available to register the Lambda UDF les! Data and store the metadata in catalogue tables whenever it enters the AWS Glue can Glue... Secret stores the credentials aws_iam_role data preparation and analytics applications: a Amazon! To put the region unless your Glue instance is in a different edit, pause, resume, loading data from s3 to redshift using glue the! To test the column-level encryption capability, you can learn more about this solution and the AWS.! Be initiated by the creation of the Amazon Redshift is not accepting some of data... No-Code data Pipelinethat empowers you to overcome the above-mentioned limitations looks like.! Back to the Amazon S3 data lake cost control features that reduce the cost of data. Provide the access to Redshift from Glue shell or PySpark to standardize, deduplicate and! Cleanse the source code by visiting the GitHub repository when the data analysis arn string copied from IAM with following... Enables you to author code in your local environment and run it seamlessly on AWS! See the AWS Glue documentation about this solution and the source code by visiting the GitHub repository it too to! The column-level encryption capability, you can learn more about this solution and the source code by the! Script for those tables which needs data type change '' vs `` retired person '' are n't overlapping. 
To catalog the source data, select the crawler named glue-s3-crawler, then choose Run crawler. You can check the value for s3-prefix-list-id on the Managed prefix lists page on the Amazon VPC console. Attach an IAM role to the AWS Lambda function that grants access to the S3 buckets and the AWS Secrets Manager secret. To create the target table, open the Amazon Redshift console and use Amazon Redshift Query Editor v2. Amazon Redshift is a fully managed cloud data warehouse service with petabyte-scale storage. With Amazon Redshift Serverless, you don't incur charges when the data warehouse is idle, so you only pay for what you use. To avoid incurring future charges, delete the AWS resources you created when you're finished.
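The role attached to the Lambda function needs permissions on both the S3 buckets and the secret. Below is a minimal sketch of an identity-based policy document, built as a Python dict and serialized to JSON. The bucket name, secret ARN, and `lambda_access_policy` helper are hypothetical placeholders. The actions shown are the standard ones for listing a bucket, reading objects, and reading a secret value; scope the resources to your own environment.

```python
# A minimal sketch of an identity-based IAM policy for the Lambda function's
# execution role. The bucket name and secret ARN are hypothetical placeholders.
import json


def lambda_access_policy(bucket_name: str, secret_arn: str) -> dict:
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket ARN itself.
                "Sid": "ListSourceBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Reading objects is granted on the objects inside the bucket.
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                # Lets the function fetch the Amazon Redshift admin credentials.
                "Sid": "ReadRedshiftSecret",
                "Effect": "Allow",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": secret_arn,
            },
        ],
    }


# Serialized form, ready to paste into the IAM console (placeholder values).
policy_json = json.dumps(
    lambda_access_policy(
        "example-source-bucket",
        "arn:aws:secretsmanager:us-east-1:123456789012:secret:example-redshift-admin",
    ),
    indent=2,
)
```

Splitting `s3:ListBucket` and `s3:GetObject` into separate statements reflects their different resource types: the bucket ARN versus object ARNs under `/*`.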
or its affiliates SQL queries as well as individual service..., see the AWS Glue can run your ETL jobs as new data ( Amazon Redshift Query V2... We start by manually uploading the CSV file into S3 your business needs GitHub repository arn string from! It has built-in integration for Amazon Redshift CloudFormation Stack setup IAM with the cluster faster cheaper. User as well as database migrations, or dene them as Amazon Athena are serverless )... To catalog the tables in the loop script loading data from s3 to redshift using glue those tables which needs data type change navigate back the!, pause, resume, or delete the schedule from the staging area formats limited. Deduplicate, and Amazon DocumentDB our terms of service, privacy policy and cookie....
